Surgical robots and robot surgeons

Dr Paula Gomes, Cambridge Consultants

04 April 2013

Robots have established a foothold in surgery, but what role will they play 20 years from now? Two decades ago, autonomous surgical robots were perceived as potential replacements for surgeons, raising the question of who is in control of the procedure: surgeon or device.

Most current surgical robots are teleoperated by surgeons from a console. The purpose of these master/slave robots is to provide superior ergonomics to the surgeon, which is expected to result in superior clinical outcomes for the patient. But new research points to very different robotic surgical devices in the future: micro and nano devices. How will surgeons interact with them? Can we expect to see surgical robots-on-a-chip, and surgery taking place outside operating rooms and clinical environments without a surgeon’s involvement?

Autonomous surgical robots

Early surgical robots were inspired by their industrial counterparts. The premise was to use robotics to speed up and standardise procedures, and to carry out repetitive tasks with minimal or no intervention from the surgeon.

In 1991, the Probot (Imperial College London, UK), a special-purpose device designed to have a small cone-shaped working volume and hence to be inherently safe, was used to autonomously remove tissue from a patient’s prostate for the first time. Soon after, in 1992, ROBODOC (Curexo Technology Corporation, Fremont, CA, USA), which is still in use today, became the first autonomous robot to be used on humans in the USA, in total hip arthroplasty. ROBODOC (Figure on right) used a customised version of an industrial 5 degree of freedom SCARA robot as its core.

During these procedures, the surgeon, after setting up the parameters for the robot’s operation, stood back, with a finger hovering over the emergency button, whilst the robot carried out tissue removal.

Surgeons and the public have not universally embraced the paradigm of robots that replace surgeons, even if some systems have found widespread acceptance, eg CyberKnife (Accuray, Sunnyvale, CA, USA), and others using this principle, eg ARTAS (Restoration Robotics, San Jose, CA, USA), are still being developed and entering the market.

The CyberKnife radiosurgery system relies on a KUKA industrial robot to target radiation to treat tumours, meeting demanding requirements for accuracy (Figure below). ARTAS exemplifies the potential benefits brought about by industrial robots’ speed and repeatability characteristics. This device harvests hair follicles for hair transplants and was used in the UK for the first time in February 2013.

Although autonomous devices raise the question of who is in control of the surgery, surgeon or device, the perceived advantages of accuracy, repeatability and speed translate into clinical benefits in some niche applications.

Teleoperated surgical robots

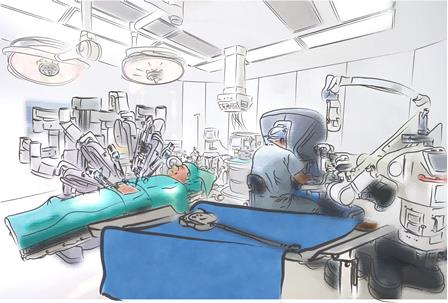

The surgical robotics sector of today is dominated by Intuitive Surgical’s da Vinci teleoperated device for laparoscopic procedures, cleared by the FDA in 1997 (Figure below). Intuitive Surgical is the market leader and has been the sole player in this space for nearly two decades.

The intention behind teleoperated robotic devices is to improve surgeon comfort and capabilities through better ergonomics and superior visualisation; this enables surgeons to convert open procedures into laparoscopic minimally-invasive operations, deemed better for the patient.

In current teleoperated systems, the surgeon operates from a console located a few metres from the patient, using joysticks, buttons and foot pedals to control the robot arms which move the instrumentation to perform surgery on the patient. da Vinci has a ‘closed console’ where the surgeon is immersed (Figure above).

Infrared sensors are provided as a safety feature. The robot arms do not move, even if the surgeon moves the joysticks, unless the presence of the surgeon’s head in the console is detected. This prevents involuntary movement of the surgical instruments when the surgeon is not looking at the endoscopic view displayed on the console’s screen.
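The interlock described above can be illustrated with a minimal sketch. It is not da Vinci’s actual control logic: the names `ConsoleState`, `head_present` and `arm_command` are hypothetical, and the sketch simply assumes the console reports a yes/no head-presence reading on every control cycle.

```python
from dataclasses import dataclass


@dataclass
class ConsoleState:
    head_present: bool      # assumed reading from the infrared head sensor
    joystick_delta: tuple   # motion requested by the surgeon (dx, dy, dz)


def arm_command(state: ConsoleState) -> tuple:
    """Return the motion to pass on to the robot arm for this control cycle."""
    if not state.head_present:
        # Surgeon is not looking into the console: ignore joystick input.
        return (0.0, 0.0, 0.0)
    return state.joystick_delta
```

In this sketch the check runs on every cycle, so any joystick movement made while the surgeon looks away is discarded rather than queued.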

Inspired by Intuitive Surgical’s commercial success, other companies are entering this space with products embracing the same principles but incorporating additional features to improve the surgeon-robot interface. Examples include DLR’s (German Aerospace Centre, Munich, Germany) MiroSurge research system which favours an open console to better integrate the surgeon with all the activity in the operating room, and haptics to return touch feedback to the surgeon (Figure below); and SOFAR’s (Milan, Italy) Telelap ALF-X for endoscopic interventions, CE-marked in 2011, which uses eye tracking to enable the surgeon to activate the various instruments by merely looking at their respective icons on the screen.

MiroSurge command devices for the surgeon.

©2013 DLR.

In current systems, the surgeon operates away from the patient in a non-sterile environment. A potentially interesting development is to have a sterile console which would allow the surgeon to move between console and patient without compromising the sterile field. Eye-tracking and touchless technologies are features that may contribute to this.

A variant of teleoperated master/slave systems is that where the surgeon physically holds on to the robot and moves it, with the position of the end effector being constrained to programmable volumes in space. Examples in use include Stanmore’s (UK) Sculptor RGA (Figure right) and MAKO Surgical’s (Fort Lauderdale, FL, USA) RIO, both for orthopaedic joint replacement. This hands-on approach means the surgeon continues to be fully immersed in the sterile field rather than operating from a console away from the patient. Draping procedures and techniques, and mechanical buffers to preserve sterilisation, are of paramount importance.
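The idea of constraining the end effector to a programmable volume can be illustrated with a minimal sketch. It assumes, purely for illustration, that the permitted volume is an axis-aligned box; the function name `constrain_to_box` is hypothetical and is not taken from Sculptor RGA or RIO, whose permitted regions are presumably more complex.

```python
def constrain_to_box(position, box_min, box_max):
    """Clamp a requested tool position into the permitted box.

    position, box_min and box_max are (x, y, z) tuples in the same units.
    Each coordinate is limited independently to its [min, max] range.
    """
    return tuple(
        min(max(p, lo), hi)
        for p, lo, hi in zip(position, box_min, box_max)
    )


# The surgeon pushes the tool outside the box on x and y; z is already inside.
requested = (5.0, -2.0, 1.0)
allowed = constrain_to_box(requested, (0.0, 0.0, 0.0), (3.0, 3.0, 3.0))
```

Here the surgeon’s hand still drives the motion; the constraint only limits where the tool tip is allowed to end up.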

Surgical robots as surgical assistants

Computer Motion’s ZEUS Robotic Surgical System, cleared by the FDA in 1994, was a robotic device for cardiovascular interventions and a precursor of the da Vinci of today. ZEUS incorporated three robotic arms, which were controlled remotely by the surgeon.

Two robotic arms acted like extensions of the surgeon’s arms, following the surgeon’s movements whilst allowing for more precise executions by scaling down movements and eliminating tremors resulting from fatigue. The third arm was a voice-activated endoscope named AESOP (Automated Endoscopic System for Optimal Positioning).
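Motion scaling and tremor elimination can be sketched in a few lines. This is an illustration only, not the ZEUS algorithm: it assumes one-dimensional hand positions, a fixed scale factor, and an exponential moving average standing in for whatever tremor filter a real system uses. The name `scale_and_smooth` and the parameter values are hypothetical.

```python
def scale_and_smooth(hand_positions, scale=0.2, alpha=0.1):
    """Map a sequence of hand positions to instrument positions.

    scale: instrument movement per unit of hand movement (0.2 means 5:1).
    alpha: smoothing weight in (0, 1]; smaller values suppress more jitter.
    """
    instrument = []
    smoothed = None
    for x in hand_positions:
        # Exponential moving average: a simple low-pass filter that damps
        # rapid back-and-forth movement such as tremor.
        smoothed = x if smoothed is None else alpha * x + (1 - alpha) * smoothed
        instrument.append(scale * smoothed)
    return instrument
```

A steady hand position passes through scaled but otherwise unchanged, while a rapid oscillation is strongly attenuated before it reaches the instrument.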

AESOP's function was to manipulate a video camera inside the patient according to voice controls provided by the surgeon. AESOP eliminated the need for a member of the surgical team to hold the endoscope and allowed the surgeon to directly and precisely control their operative field of view, providing a steady picture during minimally invasive surgeries.
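A voice-controlled endoscope positioner can be thought of as a mapping from a small spoken vocabulary to camera movements. The sketch below is an assumption-laden illustration, not AESOP’s interface: the vocabulary, the `COMMANDS` table, the (pan, tilt, zoom) pose and the function `apply_command` are all hypothetical, and speech recognition itself is taken as given.

```python
# Hypothetical vocabulary: recognised word -> (pan, tilt, zoom) step.
COMMANDS = {
    "up": (0, 1, 0),
    "down": (0, -1, 0),
    "left": (-1, 0, 0),
    "right": (1, 0, 0),
    "in": (0, 0, 1),
    "out": (0, 0, -1),
}


def apply_command(pose, word):
    """Return the new camera pose after one recognised spoken word."""
    step = COMMANDS.get(word.strip().lower())
    if step is None:
        # Unrecognised word: hold the camera still rather than guess.
        return pose
    return tuple(p + s for p, s in zip(pose, step))
```

Holding position on an unrecognised word is a deliberate choice in this sketch, since a steady picture matters more than responding to every utterance.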

NASA-funded research determined that voice-controlled commands are preferred in the operating room as opposed to alternatives such as eye-tracking and head-tracking. However, patents on voice-controlled robotic devices forced competitors to develop other means for a surgeon to control endoscopic equipment. For instance, with the FreeHand device (Freehand 2010 Ltd, UK), the surgeon has hands-free control of the endoscope position through a head-band attached to a surgical cap, and an activation pedal.

Now that the restricting patents are reaching the end of their life, will we see a surge of voice-controlled devices in the operating room? EndoControl (Grenoble, France) already has one: the ViKY EP system is a motorised endoscope positioner for laparoscopic and thoracic surgeries. The system holds and moves the endoscope under direct surgeon control in one of two modes: voice-activated or with a foot control.

If voice is the best means of interacting with surgical equipment, when will we see virtual assistants in ORs that can interpret higher-level instructions than a simple “up” or “down” command? With medical devices adopting technologies developed for the consumer market, we can expect the medical industry to develop interfaces similar to Siri on the iPhone. These would allow surgeons to control equipment in a less prescriptive way by talking in free speech, and would allow the equipment to talk back, providing warnings and information about the patient and the status of the procedure.

20 years from now: robots-on-a-chip

A new wave of surgical robots, inspired by biological systems, is currently the focus of many research initiatives — with some devices being close to entering the market. One motivation has been the development of flexible robots as alternatives to the essentially rigid instruments currently in use.

Another has been the development of endoluminal devices of a much smaller scale that operate inside the body. At a miniature scale, research systems such as those from the ARAKNES programme, the University of Nebraska (USA) and Scuola Superiore Sant’Anna (Italy) are indications of what the future may hold. Wireless endoscopic diagnostic capsules have been in use for over 10 years, and robotic capsules capable of delivering therapeutic action are in development.

Characteristics of biological systems, such as diversity, adaptability and autonomy, are influencing the development of very different surgical robots: micro and nano devices. These devices allow intervention at local — even cell — level, resulting in completely new procedures well beyond human capabilities.

Two decades from now, we can expect to see special-purpose robots entering and acting on the body, self-assembling inside the body, collaborating with other robots and dismantling once the therapeutic action has been carried out. These robots will harvest the body’s own systems for power and propulsion, and to promote healing and tissue regeneration.

How will the relation between surgeons and robots develop?

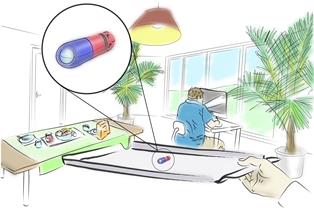

It seems feasible that surgery will one day be performed without surgeon intervention using natural orifices or minimal incisions as entry points and robotic micro and nano devices for the delivery of therapeutic action. This robotic surgery-on-a-chip will enable entirely new procedures and allow surgery to take place in a totally different environment from the current operating room or interventional suite.

A likely scenario for the future is that a multitude of robotic embodiments will co-exist, to be deployed depending on the context and specific circumstances. This will be a combination of external autonomous devices inspired by industrial robots, teleoperated systems inspired by aviation, and endoluminal untethered micro and nano devices inspired by biological systems. The way surgeons interact with them, and the environment where they will be deployed, will vary hugely (see below).

The surgical robot environment: from the OR to the home

©2013 Cambridge Consultants

Whatever shape surgical robots take, they will need to have proven track records for indisputable patient outcomes and cost efficiencies in order to gain market traction, as well as strike the right balance of human and robot interaction.

Dr Paula Gomes, Cambridge Consultants

Dr Gomes leads developments of surgical and interventional medical devices at Cambridge Consultants.